Features

The hyperbox-brain toolbox has the following main characteristics:

Types of input variables

The hyperbox-brain library provides separate learning models for data with continuous variables only and for mixed-attribute data.

Incremental learning

Incremental (online) learning models are built one input pattern at a time and are updated continuously. They are appropriate for big data applications where real-time response is an important requirement. For each incoming input pattern, these learning models either generate a new hyperbox or expand an existing hyperbox to cover it.
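The create-or-expand step can be illustrated with a minimal sketch in plain Python. It is not the library's implementation: the `Hyperbox` class, the `learn_one` function, and the maximum side length `theta` are hypothetical names chosen for illustration, and the sketch leaves out the membership-based selection of candidate hyperboxes and the handling of overlaps between classes that real fuzzy min-max algorithms typically perform.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Hyperbox:
    lower: List[float]  # minimum corner of the box
    upper: List[float]  # maximum corner of the box
    label: int


def fits_after_expansion(box, x, theta):
    """True if covering x keeps every side length at or below theta."""
    return all(
        max(u, xi) - min(l, xi) <= theta
        for l, u, xi in zip(box.lower, box.upper, x)
    )


def learn_one(boxes, x, label, theta=0.3):
    """Expand a same-class hyperbox to cover x, or create a new one."""
    for box in boxes:
        if box.label == label and fits_after_expansion(box, x, theta):
            box.lower = [min(l, xi) for l, xi in zip(box.lower, x)]
            box.upper = [max(u, xi) for u, xi in zip(box.upper, x)]
            return box
    # No existing box can absorb x: start a point-sized hyperbox at x.
    new_box = Hyperbox(list(x), list(x), label)
    boxes.append(new_box)
    return new_box


# Feed samples one by one, as an online learner would receive them.
boxes = []
stream = [([0.10, 0.10], 0), ([0.20, 0.25], 0), ([0.90, 0.80], 1), ([0.70, 0.15], 0)]
for x, y in stream:
    learn_one(boxes, x, y)
```

In this toy stream the second sample expands the first hyperbox, while the third and fourth samples each start a new hyperbox because no existing box of their class can cover them within `theta`.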

Agglomerative learning

Agglomerative (batch) learning models are trained using all training data available at training time. They aggregate existing hyperboxes into new, larger hyperboxes based on similarity measures among hyperboxes.
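As a rough sketch of the aggregation idea, the plain-Python code below starts with one point-sized hyperbox per training sample and repeatedly merges the most similar pair of same-class hyperboxes. The function names, the `min_similarity` threshold, and the similarity measure itself (one minus the longest side of the merged box) are assumptions made for illustration. The library's own similarity measures and its checks against overlap with other classes are not reproduced here.

```python
from itertools import combinations


def merged(a, b):
    """Smallest box (lower, upper, label) covering boxes a and b."""
    lower = [min(p, q) for p, q in zip(a[0], b[0])]
    upper = [max(p, q) for p, q in zip(a[1], b[1])]
    return (lower, upper, a[2])


def similarity(a, b):
    """Stand-in measure: 1 minus the longest side of the merged box."""
    lower, upper, _ = merged(a, b)
    return 1.0 - max(u - l for l, u in zip(lower, upper))


def agglomerate(samples, labels, min_similarity=0.7):
    # Start with one point-sized hyperbox per training sample.
    boxes = [(list(x), list(x), y) for x, y in zip(samples, labels)]
    while True:
        # Score every pair of hyperboxes that share a class label.
        candidates = [
            (similarity(boxes[i], boxes[j]), i, j)
            for i, j in combinations(range(len(boxes)), 2)
            if boxes[i][2] == boxes[j][2]
        ]
        if not candidates:
            return boxes
        best, i, j = max(candidates)
        if best < min_similarity:
            return boxes
        # Replace the most similar pair with their merged hyperbox.
        new_box = merged(boxes[i], boxes[j])
        boxes = [b for k, b in enumerate(boxes) if k not in (i, j)]
        boxes.append(new_box)


X = [[0.10, 0.10], [0.20, 0.25], [0.70, 0.15], [0.90, 0.80], [0.85, 0.90]]
y = [0, 0, 0, 1, 1]
result = agglomerate(X, y)
```

Unlike the incremental case, every decision here is made with the full training set in view, so the outcome does not depend on the order in which samples are presented.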

Ensemble learning

Ensemble models in the hyperbox-brain toolbox build a set of hyperbox-based learners from a subset of training samples or a subset of both training samples and features. Training subsets of base learners can be formed by stratified random subsampling, resampling, or class-balanced random subsampling. The final prediction of an ensemble model is an aggregation of the predictions from all base learners based on a majority voting mechanism. An interesting characteristic of hyperbox-based models is that the resulting hyperboxes from all base learners or decision trees can be merged to formulate a single model. This increases the explainability of the estimator while still taking advantage of the strengths of ensemble models.
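The majority voting step can be sketched in a few lines of plain Python. The `majority_vote` function and the toy predictions are hypothetical and only illustrate the aggregation. How the library breaks ties is not stated here, so this sketch simply keeps the label that was seen first.

```python
from collections import Counter


def majority_vote(predictions_per_learner):
    """Combine per-learner label lists into one label per sample."""
    combined = []
    # zip(*...) groups the votes that all learners cast for the same sample.
    for votes in zip(*predictions_per_learner):
        # most_common(1) returns the most frequent label; on a tie it
        # returns the label that was encountered first.
        combined.append(Counter(votes).most_common(1)[0][0])
    return combined


base_predictions = [
    [0, 1, 1, 0],  # predictions of base learner 1 for four samples
    [0, 1, 0, 0],  # predictions of base learner 2
    [1, 1, 1, 0],  # predictions of base learner 3
]
final = majority_vote(base_predictions)
```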

Multi-granularity learning

Multi-granularity learning algorithms can construct classifiers from multiresolution hierarchical granular representations using hyperbox fuzzy sets. These algorithms form a series of granular inferences hierarchically through many levels of abstraction. An attractive characteristic of these classifiers is that, by reusing the knowledge learned at lower levels of abstraction, they can maintain high accuracy at a low degree of granularity in comparison to other fuzzy min-max models.

Learning from both labelled and unlabelled data

One of the exciting features of learning algorithms for the general fuzzy min-max neural network (GFMMNN) is the capability of creating classification boundaries among known classes while also clustering data and representing the clusters as hyperboxes when labels are not available. Unlabelled hyperboxes can then be labelled on the basis of the evidence from subsequent input samples. As a result, GFMMNN models can learn natively from datasets that mix labelled and unlabelled samples.

Ability to directly process missing data

Learning algorithms for the general fuzzy min-max neural network supported by the library can classify inputs with missing data directly, without replacing or imputing the missing values as many other classifiers require.
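One way to see why no imputation is needed is sketched below in plain Python. The sketch assumes the interval encoding described in the general fuzzy min-max literature, where a missing feature is represented by a lower bound of 1 and an upper bound of 0 so that it places no constraint on membership. Whether the library uses exactly this encoding internally is an assumption, and the function names and the sensitivity value `gamma` are illustrative.

```python
def ramp(r, gamma):
    """Ramp threshold function: clip r * gamma into [0, 1]."""
    return min(1.0, max(0.0, r * gamma))


def membership(x_lower, x_upper, v, w, gamma=1.0):
    """Membership of the input [x_lower, x_upper] in the hyperbox [v, w]."""
    per_dim = []
    for xl, xu, vi, wi in zip(x_lower, x_upper, v, w):
        above = 1.0 - ramp(xu - wi, gamma)  # penalty for exceeding the max point
        below = 1.0 - ramp(vi - xl, gamma)  # penalty for falling under the min point
        per_dim.append(min(above, below))
    return min(per_dim)


def encode(sample):
    """Map a sample with None for missing values to interval bounds."""
    # Assumed encoding: a missing feature becomes the interval [1, 0].
    lower = [1.0 if s is None else s for s in sample]
    upper = [0.0 if s is None else s for s in sample]
    return lower, upper


v, w = [0.2, 0.2], [0.4, 0.5]          # min and max points of one hyperbox
inside = membership(*encode([0.3, None]), v, w)
outside = membership(*encode([0.9, None]), v, w)
```

With features scaled to [0, 1], the missing dimension always yields a per-dimension membership of 1, so the result is decided by the observed features alone: `inside` is full membership, while `outside` is reduced only by the distance of the first feature from the hyperbox.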

Continual learning ability of new classes

Incremental learning algorithms of hyperbox-based models in the hyperbox-brain library can grow and accommodate new classes of data without retraining the whole classifier. They can generate new hyperboxes to represent clusters of new data with potentially new class labels, both during the normal training procedure and at operating time, after training has finished. This property is a key feature for smart life-long learning systems.

Data editing and pruning approaches

By combining the repeated cross-validation methods provided by scikit-learn with hyperbox-based learning algorithms, evidence from training multiple models can be used to identify which data points from the original training set, or which hyperboxes from the generated models, should be retained and which should be edited out or pruned before further processing.

Scikit-learn compatible estimators

The estimators in hyperbox-brain are compatible with the well-known scikit-learn toolbox. Therefore, it is possible to use hyperbox-based estimators in scikit-learn pipelines, scikit-learn hyperparameter optimizers (e.g., grid search and random search), and scikit-learn model validation (e.g., cross-validation scores). In addition, the hyperbox-brain toolbox can be used within hyperparameter optimisation libraries built on top of scikit-learn such as hyperopt.

Explainability of predicted results

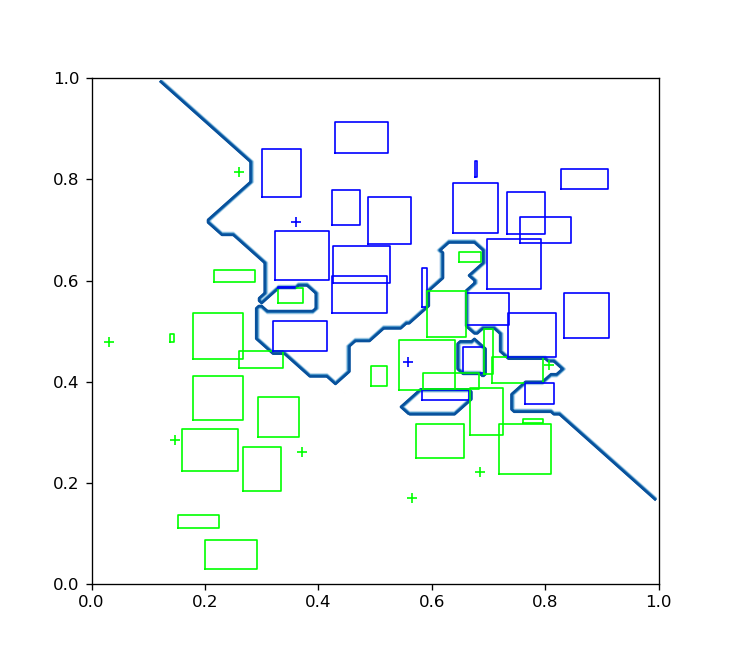

The hyperbox-brain library can provide the explanation of predicted results via visualisation. This toolbox provides the visualisation of existing hyperboxes and the decision boundaries of a trained hyperbox-based model if input features are two-dimensional features:

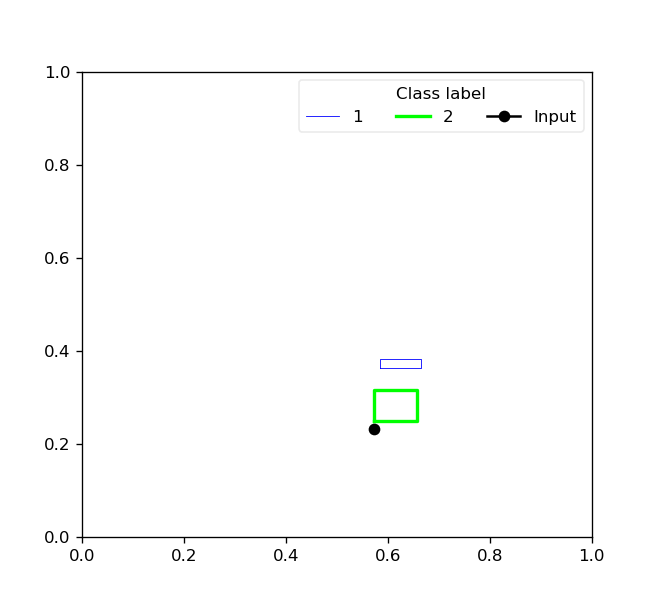

For two-dimensional data, the toolbox also shows the reason behind the class prediction for each input sample by displaying the representative hyperboxes of each class that take part in the prediction process of the trained model for a given input pattern.

For input patterns with two or more dimensions, the hyperbox-brain toolbox uses a parallel coordinates graph to display the representative hyperboxes of each class that take part in the prediction process of the trained model for a given input pattern.

Easy to use

Hyperbox-brain is designed for users with any experience level. Learning models are easy to create, set up, and run. Existing methods are easy to modify and extend.

Jupyter notebooks

The learning models in the hyperbox-brain toolbox can be used directly in notebooks in the Jupyter or JupyterLab environments.

To display plots from hyperbox-brain within a Jupyter Notebook, we need to select the proper matplotlib backend. This can be done by including the following magic command at the beginning of the Notebook:

%matplotlib notebook

JupyterLab is the next-generation user interface for Jupyter, and it may display interactive plots with some caveats. If you use JupyterLab then the current solution is to use the jupyter-matplotlib extension:

%matplotlib widget

Examples of how to use the classes and functions in the hyperbox-brain toolbox are provided in the form of Jupyter notebooks.